Automated Qualitative Analysis with Natural Language Processing (2018)

Free-text survey responses often carry tremendous insight, but a large volume of comments needs to be categorized before it is actionable. Categorization helps teams understand the big picture and correlate comments with other data, but manual classification is very time-consuming. I created a natural language classifier to automatically categorize Net Promoter Score survey comments. The goal was to evaluate how effectively categorization could be automated, with the results then checked by a human reader.

Contributions: Subject matter expertise, natural language classifier training, analytics, education, documentation

Building Classifiers

IBM Cloud uses Net Promoter Score (NPS) surveys as a measure of customer experiences with the platform and services. The comments are full of fantastic insights but become hard to digest as their number grows.

Manual classification is time-consuming, so discovering trends can often take a long time. I undertook this project as an experiment to speed up categorization using the IBM Watson Natural Language Classifier. Machine classification is not perfect, but it is amazingly fast!

Background

Creating the classifiers consisted of three steps:

- Creating training and test data. I manually classified 1200+ NPS survey comments according to categories already used by the team, along with some new ones, and exported the result to a CSV file. Classifying the entire set (both training and test data) took me about a day.

- Building classifiers. I wrote Python code to train 8 binary classifiers in IBM Watson Natural Language Classifier.

- Testing classifiers and iterating. I wrote another Python script to run the classifiers against the test data. Some classifiers needed more training data to improve their accuracy, so they went through multiple iterations. For each run, my Python code did preliminary scoring and added the scores to a CSV file.
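The data preparation behind these steps can be sketched roughly as follows. This is a minimal illustration, not the original project code: the CSV layout (a `comment` column and a semicolon-separated `categories` column), the sample rows, the `not_<category>` label naming, and the 20% test split are all assumptions made for the example. It shows how one multi-category CSV could be turned into per-category binary training rows and split into training and test sets. The call to the classification service itself is left out.

```python
import csv
import io
import random

# Made-up sample rows; the real export format is not described in the text.
SAMPLE_CSV = (
    "comment,categories\n"
    "Great support team,support\n"
    "Too expensive for what it offers,pricing\n"
    "Docs are hard to follow,documentation\n"
    "Support was slow and pricing is unclear,support;pricing\n"
    "Love the platform,\n"
)


def load_labeled_comments(csv_text):
    """Parse CSV text into a list of (comment, set_of_categories) pairs."""
    reader = csv.DictReader(io.StringIO(csv_text))
    labeled = []
    for row in reader:
        categories = {c.strip() for c in row["categories"].split(";") if c.strip()}
        labeled.append((row["comment"], categories))
    return labeled


def binary_rows(labeled, category):
    """Build rows for one binary classifier: in the category or not."""
    return [
        (text, category if category in categories else f"not_{category}")
        for text, categories in labeled
    ]


def split_train_test(rows, test_fraction=0.2, seed=0):
    """Shuffle reproducibly and hold out a fraction of rows for testing."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


if __name__ == "__main__":
    labeled = load_labeled_comments(SAMPLE_CSV)
    train, held_out = split_train_test(binary_rows(labeled, "support"))
    print(len(train), "training rows,", len(held_out), "test rows")
```

Building one row set per category keeps each classifier's question simple (does this comment belong to the category or not), which matches the binary setup described above.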

I was amazed to find that in about 10 minutes, the classifier did what took me half of a day!

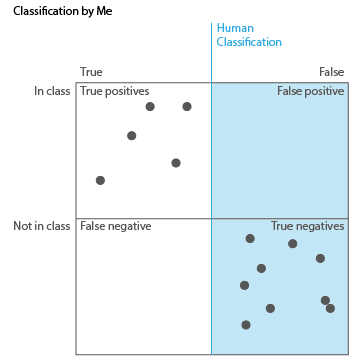

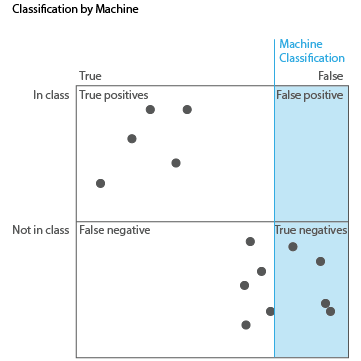

Machine classification produced few false positives but a higher number of false negatives, or misses. In other words, if the classifier placed a comment in a category, it was probably correct, but it missed a number of comments that should have been included. The standard of "correctness" is the human classification.
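The pattern of few false positives and more false negatives corresponds to high precision and lower recall. A minimal sketch of that scoring, treating the human labels as ground truth, might look like the following. The function name and the example labels are hypothetical and are not taken from the project's scoring script.

```python
def score_binary(human, machine):
    """Compare machine labels to human labels for one binary classifier.

    Both arguments are equal-length lists of booleans, where True means
    "this comment belongs to the category". Human labels are the standard.
    """
    if len(human) != len(machine):
        raise ValueError("label lists must have the same length")

    tp = sum(1 for h, m in zip(human, machine) if h and m)          # correct hits
    fp = sum(1 for h, m in zip(human, machine) if not h and m)      # false positives
    fn = sum(1 for h, m in zip(human, machine) if h and not m)      # misses
    tn = sum(1 for h, m in zip(human, machine) if not h and not m)  # correct rejections

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return {"tp": tp, "fp": fp, "fn": fn, "tn": tn,
            "precision": precision, "recall": recall}


if __name__ == "__main__":
    # Made-up labels: the machine never mislabels, but misses two comments.
    human = [True, True, True, True, False, False]
    machine = [True, True, False, False, False, False]
    print(score_binary(human, machine))
```

With these made-up labels, precision is 1.0 (everything the machine placed in the category was correct) while recall is 0.5 (it found only half of the comments a human placed there).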

Analytics Tooling

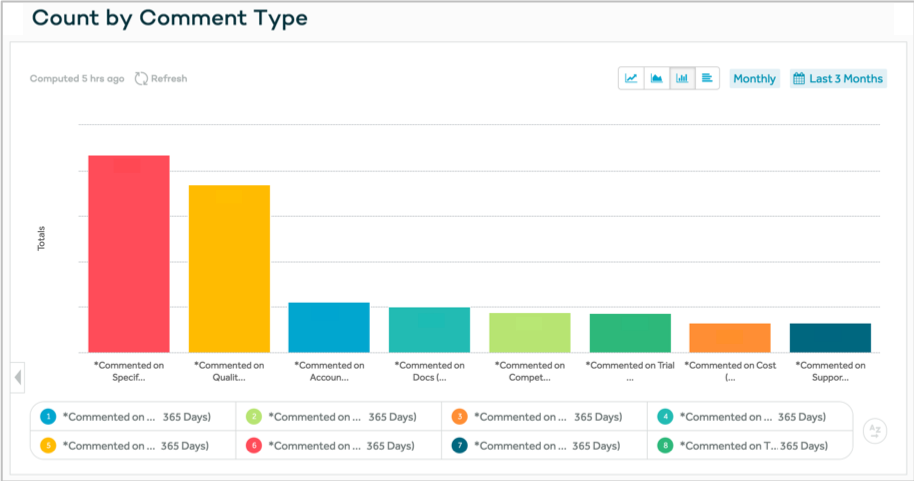

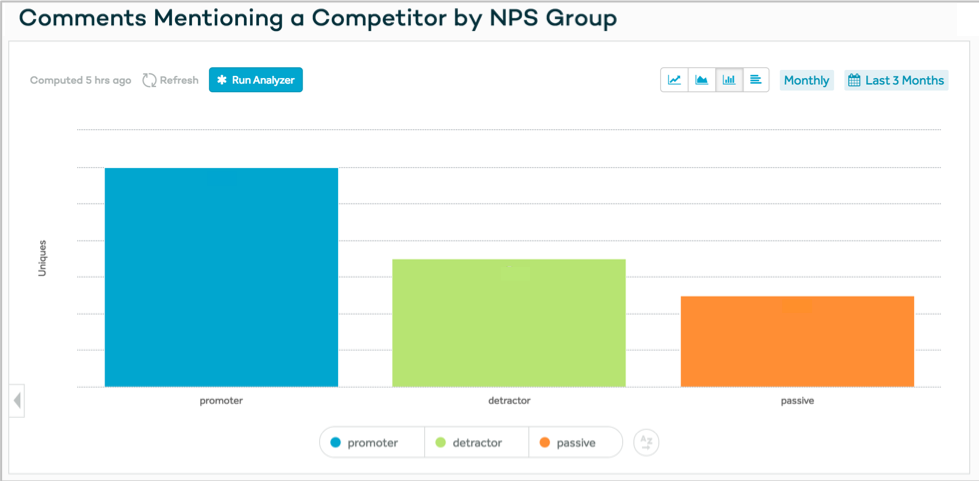

After creating the classifiers, I worked with the development team to integrate the classified text into our analytics tooling, enabling us to discover new insights. Now the team can see relationships between comment types and NPS scores, services used, retention, and other data.
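As an illustration of the kind of relationship this makes visible, the sketch below computes NPS per comment category using the standard NPS definition (percentage of promoters, scores 9 to 10, minus percentage of detractors, scores 0 to 6). The data shape, function names, and sample responses are assumptions for the example and do not reflect how the analytics tooling is implemented.

```python
from collections import defaultdict


def nps(scores):
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6)."""
    if not scores:
        raise ValueError("at least one score is required")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    return 100.0 * (promoters - detractors) / len(scores)


def nps_by_category(responses):
    """Group scores by comment category and compute NPS for each group.

    `responses` is an iterable of (score, set_of_categories) pairs; a
    response tagged with several categories counts toward each of them.
    """
    grouped = defaultdict(list)
    for score, categories in responses:
        for category in categories:
            grouped[category].append(score)
    return {category: nps(scores) for category, scores in grouped.items()}


if __name__ == "__main__":
    # Made-up responses for illustration only.
    responses = [
        (10, {"support"}),
        (9, {"support"}),
        (8, {"support"}),
        (6, {"support", "pricing"}),
        (8, {"pricing"}),
    ]
    print(nps_by_category(responses))
```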

Outcomes

"This is great information…NPS and all of the data around it can be quite daunting until you find out how to make that data work for you. The insights into how our clients truly use and feel about our offerings are invaluable. Combining information from [our tooling] will take you to the next level of understanding your clients and help you determine actions needed for improvement."

IBM Cloud NPS Program Manager

I created education sessions, blog posts, templates and examples to facilitate adoption, which generated a lot of interest. More than 50 of my peers attended the education session or watched the replay.

In the first six months, 1000+ comments were classified and are available in the analytics tooling. The classifiers generated 8000+ queries and were incorporated into numerous dashboards.